The trailers for Ben Stiller's Mitty (which looks pretty awful, in truth) remind me that the irresistible rise of Grant Shapps to the heights of Conservative Party Chairman has been one of the more amusing sub-plots of the coalition government. The arch-fantasist has become the champion of small capital, not to mention the hammer of Labour and the BBC. If you wanted to construct a fictional dodgy-dealer - part Arthur Daley, part Kim Dotcom - to give the small business sector a bad name, then you'd probably reject Shapps's history of inept content-scraping, sock-puppetry and multiple pseudonyms as way too implausible. Though he escaped prosecution for fraud, he is clearly an unscrupulous idiot with a cavalier attitude towards the truth. He makes the delusional psychosis of Jeffrey Archer, a former Tory Chairman, appear almost benign.

Shapps's fast-and-loose style is evident in his latest thoughts on the positive role of small businesses, where he expands on the traditional myth that SMEs power the economy (they don't) to claim that they are also the facilitators of social mobility: "[it is] flourishing businesses – the opportunity of owning, running or working in one – that ultimately help people to escape the circumstances of their birth" (that use of the cliché "circumstances of their birth" had me thinking fondly of the Master of Grantchester). In support of this bold claim, he refers to a World Bank study that links "the rise of small business ownership among women in India with greater legal and political rights" and also points to Eastern Europe, "where small firms are repairing the damage left by decades of socialist stagnation".

No link is provided for the Indian "study", though it is likely (if it exists) to relate to the growth of micro-finance. Though this can provide the seed-corn for a new business, most loans are used for consumption - i.e. this is mainly micro-credit rather than capital investment. The growth of the Indian economy over the last thirty years has been powered by globalisation, beneficial demographics, and improvements in productivity. Flourishing SMEs are a consequence, not a cause. The claim in respect of Eastern Europe is simplistic, ignoring the existence of small businesses in Hungary and Yugoslavia before 1989, as well as the mixed economic record since. The reparative contribution of SMEs in Russia in the 90s was negligible, in the face of oligarchic looting, while the story of the former GDR is of West German corporations buying up and often dismantling large businesses, with the countervailing growth of SMEs dependent on state-directed investment, notably via the KfW development bank.

Shapps's fairy story also ignores the reality of how small businesses are formed. While some skilled workers are able to translate their experience (often acquired working for a large business) into self-employment, this rarely leads to the employment of others. 75% of the businesses in the UK in 2012 had zero employees (see table 1, page 3). SMEs with employees tend to require working capital to start and grow, which immediately gives an advantage to the already wealthy or those with access to credit (i.e. possessing assets for collateral or having guarantors). The stirring tale of "entrepreneurs and inventors who work all hours in their own garage [and] ex-apprentices who start out with a mobile business" is a consoling fiction for wage-slaves. Most SMEs fail, and those that start without premises or in a garage tend to fail faster.

To be fair to Shapps, even Labour MPs are suckers for tales of the little guy beating the odds: "There are countless examples of working-class people climbing a ladder of opportunity through high street businesses that have gone on to become international success stories". They seem unable to understand that once a business expands it denies market share to others. An "international success story" is usually a chain store that drives out local variety. This is not necessarily a bad thing - so long as the better drives out the worse - but it does not grow the population of successful entrepreneurs who started out as market traders, it actually shrinks it.

Small businesses are inherently inimical to social mobility, if viewed from the perspective of someone seeking to advance from the working class to the middle class, though they can provide a route for progression through the various levels of the middle class - i.e. from the petit bourgeois to the bourgeois, in classical terms. A talented working-class kid is more likely to progress to the middle class by working for a large company, e.g. by reaching middle management or a professional role, than by working for or founding a small business. The great enabler of social mobility in the 1945-75 era was the expansion of the public sector.

At times, Shapps's chutzpah can only be admired. For example: "Businesses create every penny of the wealth we need to pay for our nation's schools, our NHS and our pensions". The obvious rejoinder is that wealth is created by people, i.e. workers, not by businesses. Businesses create nothing, because that is not their purpose. Their function as legal entities is to distribute wealth. This is why company law deals with shares, dividends, limited liability, and social obligations such as tax and externalities like pollution. This is not a pedantic point. By privileging businesses (wealth distributors) over workers (wealth creators), we privilege business owners (capitalists) who are in a position to secure the lion's share of profit.

Shapps ends his eulogy thus: "Conservatives don't love business for some abstract reason. We love it because of what it offers our children. Hope". If you substituted "inheritance" for the last word, you'd have a more honest statement of the Shappsian worldview.

Sunday, 29 December 2013

Tuesday, 24 December 2013

Blood and Honour

The consensus is that last night's game against Chelsea won't live long in the memory. A combination of difficult weather, a negative opposition and some indulgent refereeing made it an unattractive spectacle. Putting John Terry on the pitch was just rubbing salt in the wound. The only fun to be had was the subsequent sight of Jose Mourinho auditioning for UKIP, with his "English blood is best" speech. If Sam Allardyce came out with that, he'd be crucified. Of course, the Portugeezer's chief attraction for many is not his ability to grind out the points, but his talent for distraction, not least in respect of the failings of owners and players. Chelski were thuggish on the field and off it (their fans singing about 96 dead scousers was particularly classy). Mourinho's jibe that Arsenal players "cry" was bizarre - the only time a player kicked the ball out of play was when Ramsey did so after Ramires collapsed in a heap. Of course, bullies always see compassion as weakness.

17 games in is just short of the half-way mark, but I thought an update on my clairvoyant powers would be appropriate now, before the commentariat herd gather at the halfway waterhole. Liverpool and Arsenal are on 36 points. A straight-line extrapolation would suggest that the champions will finish on 80 points, which is in line with my prediction after 11 games. At this stage last season, Manure had 42 points, while City were second on 36 and we were on 27. We then produced 46 points from the remaining 21 games, which, if repeated, would deliver a total points haul of 82. In the 2011-12 season, City were top with 44 and Manure second on 42. We made 38 points from the remaining 21 games. If repeated, that form would get us to a total of 74, which would at least ensure another season in the Champions League. I think we can dare to dream, so I'm still predicting a final tally of 78-80 points.

While there are plenty of tough periods ahead - Liverpool and Manure back to back in February and Chelsea and Man City back to back in March - I think we'll do reasonably well in these "big games", probably 6 points from 12, which will keep us on track. We owe both the Manchester teams a strong performance at the Emirates, while our track record at Anfield and Stamford Bridge has improved in recent years. The cups are likely to be less of a distraction than usual. Few expect us to progress against Bayern, though I fancy us to squeak through on away goals, which will be deliciously ironic given Wenger's recent comments, probably by winning 1-0 at home and losing 2-1 away. The FA Cup is in the lap of the comedy gods. Spurs under Tim Sherwood may discover their roots, which means beating us (Adebayor to score), getting to the final and losing, and finishing mid-table.

In terms of the squad, a number of players have seen a dip in their performance over recent weeks (Giroud, Ramsey, Wilshere and Ozil), while others are only starting to get back to their best (Walcott and Cazorla). Podolski and Oxlade-Chamberlain have yet to get their season started. It was interesting that Wenger chose not to make any substitutions last night, suggesting that he doesn't think any players are approaching the "red zone" of exhaustion yet. As the training regime is geared to reaching peak fitness and stamina in the New Year, this suggests that we're in good physical shape. I suspect Ozil may get a couple of games off over the next fortnight to recharge his batteries, while Podolski should allow Giroud to limit himself to 60 minutes for a few matches. Once we get past game 19, transfer speculation will go into over-drive, which might be the Frenchman's cue to start banging in the goals again.

Overall, we should be pleased with the way that the season has gone so far. We're better than we were last year, and there are signs that we can be better still. 2 points from the last 9 is obviously disappointing, but against tough opponents we were only outplayed once, and the 6-3 scoreline against Man City reflected an atypically poor defensive display by us rather than their habitual free-scoring. They look like the one team that could draw away from the chasing pack, but they also look vulnerable at the back - a classic City team, in other words. They, rather than Manure, Chelski or Liverpool, remain the obvious threat to our ambitions. If we're still in the mix come our return fixture on the 29th of March, then we'll have our destiny in our own hands.

There's a definite retro air to the Premier League at present: ugly Chelski, inconsistent Citeh, efficient Liverpool, struggling Manure, tough Everton, laughing-stock Spuds. Arsenal look like a squad that is gelling, with an improving defence, an inventive midfield and an under-appreciated striker. It's just like 1989 all over again. The shit-for-brains Chelsea fans who sang about Hillsborough don't know what spirits they may be invoking. My money's on the honourable Aaron Ramsey to provide the Michael Thomas moment when we get down to the wire.

Friday, 20 December 2013

The Big Boy Made Me Do It

Tuesday's meeting between Barack Obama and the technology companies was an object lesson in corporate manipulation. The hot topic was always going to be government surveillance, given a federal judge's ruling on Monday that the NSA's activities were probably illegal. Nonetheless, it suited the companies' purpose to claim that Obama's decision to open the session by announcing the appointment of a senior Microsoft guy to help with the healthcare.gov site (i.e. an inspiring example of cooperation) was an attempt to avoid the subject.

The reality was a guest list, prepared by the White House, that included almost no companies that could meaningfully contribute to a debate on a systems integration challenge. Microsoft, who could, sent their chief legal officer, while Oracle and Cisco, who had attended the first "tech summit meeting" in 2011, were absent. It strains belief to think that the White House was dumb enough to imagine the best brains to pick in respect of healthcare.gov would be the CEOs of Apple, Twitter, Yahoo, Google, AT&T, Facebook, LinkedIn, Dropbox, Netflix etc. If they really wanted to hijack the meeting, they'd have done better to invite Larry Ellison back. The NSA fallout was always going to be the main topic of debate.

The media spinning was intended to suggest that Obama is being dragged reluctantly to the negotiating table by Silicon Valley, this despite the fact that a Presidential panel would report on Wednesday recommending curbs on the NSA. The New York Times quoted the non-attending CEO of CloudFlare, a website optimisation company, to the effect that both "sides" were suffering from a loss of trust: "If you’re on the White House side, the issue is they’re getting beaten up because they’re seen as technically incompetent. On the other side, the tech industry needs the White House right now to give a stern rebuke to the N.S.A. and put in real procedures to rein in a program that feels like it’s out of control." The suggestion is that the tech industry is making the running on curtailing surveillance abuses, rather than being an accessory to the crime.

The relentless propaganda of the right, endorsed by Silicon Valley ideologues, is that government cannot be trusted to act in the best interests of citizens and that it is inherently incompetent. In contrast, private businesses spend a lot of advertising money extolling their own trustworthiness and insisting that the need to serve customers keeps them honest (their anxiety about reputational damage is largely PR, as customers rarely exercise effective sanction). The Snowden revelations have given the lie to this, both in the evidence of industry connivance and in the competency of government. The current campaign seeks to paint a picture of a freedom-loving industry facing off against an intrusive state. David bullied by Goliath.

Following the meeting, the tech titans issued a statement to a grateful world: "We appreciated the opportunity to share directly with the president our principles on government surveillance that we released last week and we urge him to move aggressively on reform". Those high-minded "principles" naturally do not question the rights of the technology companies to exploit personal data, and even go so far as to insist that states should harmonise "conflicting laws" and reject "Balkanisation" (i.e. support global monopolies). In other words, the usual neoliberal agenda. The peremptory nature of the wording ("The undersigned companies believe that it is time for the world’s governments to address the practices and laws regulating government surveillance of individuals and access to their information") is telling. They'll be demanding a Nobel Peace Prize next. After all, Obama's got one.

While there is a sense that the debate in the US is predicated on the assumption that people are idiots who can't distinguish between the rights of citizens and self-interested corporations, this remains a significant improvement on the level of the debate in the UK, where the attitude of the state is that we are not merely stupid but untrustworthy too. Alan Rusbridger, the well-known country-lover, highlights the irony: "This muted debate about our liberties – and the rather obvious attempts to inhibit, if not actually intimidate, newspapers – have puzzled Americans, Europeans and others who were brought up to regard the UK as being the cradle of free speech and an unfettered press". Of course, Rusbridger is a romantic liberal, so he actually believes in such unicorns as "an unfettered press".

The crudeness of the British state's attitude towards digital surveillance, exemplified in the view of the former Head of GCHQ that the security services should not be accountable to Parliament, is not the result of the lack of a constitution or formal rights, but the consequence of the irrelevance of the domestic IT industry (Silicon Roundabout isn't demanding a summit with David Cameron) and the waning power of the press. Rupert Murdoch's baleful legacy is not simply page 3 and phone-hacking but the corrupt interconnectedness of journalism, police and politics. The press has become an ever more obliging propaganda arm of government, and exposés of expense-fiddling parliamentarians are little more than spiteful attempts to assert their nominal independence.

The purpose of Tuesday's meeting was to get the US government to agree to rein in state surveillance, but not to go so far as to challenge the right of the technology companies to exploit personal data. Neither "side" wishes to jeopardise Silicon Valley's global dominance, though they recognise that some concessions will have to be made to neoliberal power blocs elsewhere, notably the EU. Once a satisfactory protocol is agreed, the "debate about our liberties" will become as muted in the US and Europe as it is in the UK today. Our proud boast in Britain is that we are "open for business", which means that we have no significant domestic Internet industry to champion and an aversion to prosecuting multinational technology companies for tax dodging. We are well ahead of the game in terms of neoliberal compliance.

Tuesday, 17 December 2013

No More Triumphs

David Cameron has apparently declared "mission accomplished" for British troops in Afghanistan. Pedants might point out that he was responding to a journalist's prompt, so the term is a plant with an eye to a headline, but this is incidental. The meaning of the spectacle is captured in the use of the word "declared", with its cricketing connotations of the captain's judgement. The Prime Minister has decided that we have done enough and it is time to quit the field. Just to reinforce the point, he was even accompanied by a celebrity retiree in the person of Michael Owen.

In an earlier age, the term "mission accomplished" would have been voiced by a military leader reporting to the civil power that had commissioned him. This was not merely confirmation that the will of the state had been successfully executed, but that the powers temporarily vested in the military had now been formally returned. The model for this was the practice in classical Greece and Republican Rome of treating warfare as an episodic activity requiring limited sanction. In the modern era of citizen armies, conscription and demobilisation became the ceremonies that marked these power exchanges, with triumphal processions and laurel wreaths going out of fashion in democracies in favour of impromptu kissing couples in public squares.

The ragged wars of the post-1945 period could not be characterised as successes by the West, and usually lacked call-ups and demobs, so there were few opportunities to claim "mission accomplished" until Thatcher decided to buck the trend with a Roman triumph as the armed forces marched into the City in 1982 to celebrate victory in the Falklands. But if that was a throwback in style, it also marked a return to the celebration of political will as much as military accomplishment. For all the praise of the fallen and the scarred, the chief message was clearly "we were right, and don't anyone say otherwise". The triumph was less a military conclusion than a political validation.

This neocon fashion reached the height of unintentional parody in 2003 when George W Bush did his Top Gun impression on the USS Abraham Lincoln beneath a banner with the legend "Mission Accomplished". The irony was that the military (rightly) did not consider that the mission in Iraq was anywhere near accomplished. The White House subsequently claimed that the banner's meaning was taken out of context, but their failure to spot the obvious hostage to fortune was a pretty good indication of both their hubris and the casual contempt for facts in the "war on terror".

Cameron's declaration is less vainglorious, but it is no less of a stunt. It is not difficult to make the case that the original objectives of Britain's involvement in Afghanistan have not been met, but the government has now reframed the brief to match the current reality, specifically bumping the Taliban training camps into Pakistan. According to the Prime Minister: "the purpose of our mission was always to build an Afghanistan and Afghan security forces that were capable of maintaining a basic level of security so this country never again became a haven for terrorist training camps". Job done. Let's get out of here before that "basic level" crumbles.

Given the current cuts in military spending, and the determination of most politicians to spunk an increasing slice of what is left on a Trident replacement, not to mention the emerging money-pits of cyber-warfare and drones, British troops aren't likely to see much action over the next decade or so (bumping the al-Qaida franchise out of Syria will be pursued via proxies). The current sentimental mood of Help for Heroes and tabloid-sponsored award ceremonies will linger on, but we'll gradually revert to the British tradition of seeing squaddies as crypto-hooligans whom it's best to steer clear of.

Just as Obama's kill-list symbolises the increasing bureaucratisation of war (and the militarisation of the bureaucracy), Cameron's downbeat triumph symbolises the increasingly trivial nature of the decisions taken to enter and exit wars. It's just business. With the ever-present background hum of total surveillance and cyber-warfare already in place, we are now in an era of permanent, low-level conflict, preferably at arm's length. The exceptional powers of the military have been arrogated by the state as a none-too-subtle way to bypass democracy. In such a world, we have no need of triumphs.


Just as Obama's kill-list symbolises the increasing bureaucratisation of war (and the militarisation of the bureaucracy), Cameron's downbeat triumph symbolises the increasingly trivial nature of the decisions taken to enter and exit wars. It's just business. With the ever-present background hum of total surveillance and cyber-warfare already in place, we are now in an era of permanent, low-level conflict, preferably at arm's length. The exceptional powers of the military have been arrogated by the state as a none-too-subtle way to bypass democracy. In such a world, we have no need of triumphs.

Friday, 13 December 2013

Walter Shows the Way

The big news in the world of wacky baccy this week was the decision of Uruguay to partially legalise marijuana, which has resulted in apparently sober calls for the nation to be awarded the Nobel peace prize (I love you too, man). Ultimately this is a sideshow. Perhaps of more significance is the suggestion that the UK government may look to regulate rather than ban synthetic drugs, though I suspect they'll pass up this opportunity in the short term. The emblematic role of drugs in the tabloid press (see Nigella Lawson) means that we'll probably be a late adopter as far as liberalisation or decriminalisation is concerned, but the fact that it is being considered indicates that a shift in attitude is under way. The key word is "regulate".

Perhaps the most overt sign of this change in popular culture has been Breaking Bad. Because of their length, TV drama series tend to require a lot of supporting comment - the auxiliary of constant blather intended to make you watch the programme and thus the adverts. Drama provides more grist for this than comedy, which suggests that the rise of social media has been decisive in creating the current "golden age of TV". With the exception of those aimed at knowing niche audiences (The IT Crowd, The Big Bang Theory), sitcom has been on the slide since 2006 and the birth of Twitter. If we'd imagined microblogging in the 90s, we'd probably have thought that the dissemination of jokes would be a killer app, but it turns out that the TV cheese for this particular wine is the traditional water-cooler guff of dramatic reveals and shouting at talent shows and politicians. It's reassuringly like Drury Lane in the eighteenth century.

Though much ink and many bytes are spent explicating the "narrative arc" and the moral quandaries of the central characters, the key meaning of these dramas can be found in the mise en scène, which doesn't tend to change much from beginning to end. Thus The Sopranos was a study of an SME in self-destructive and terminal decline, while The Wire looked more widely at institutional failure. Breaking Bad suggests that drugs might be a domestic manufacturing industry of the future, with a bit of luck. Despite the thick icing of morality and symbolic violence, all of these series are worrying away at industrial decline in the US and its social consequences. In Walter White's fall from grace as a chemistry teacher, there is a recognition that recreational drugs are the misapplication of a noble calling. The implication is that a small shift in the law (remember Prohibition) could make this a respectable business.

The background to this spectacle is international economic negotiations, both the global efforts coordinated by the World Trade Organisation and regional initiatives such as the Transatlantic Trade and Investment Partnership and the Trans-Pacific Partnership. The WTO was created in 1995 as an institutional upgrade on GATT, the General Agreement on Tariffs and Trade, a rolling series of negotiations that was started in 1947 with the aim of avoiding the protectionism and autarky that had scarred the 1930s. Though GATT remains active, in the form of the outstanding Doha round, the global focus has long since shifted from the reduction of tariffs towards the harmonisation of regulations, notably in the areas of commercial services, intellectual property and foreign investment. The last GATT agreement before the creation of the WTO was, coincidentally, the Uruguay round, which ran from 1986 to 1994 (the duration, longer than the Congress of Vienna, is indicative of the scope and detail of these negotiations as much as the difficulty in securing agreement).

Despite the regular use of the words "trade" and "tariffs", and the implicit valorisation of "free trade", international agreements since the Uruguay round have had less and less to do with the traditional exchange of raw materials, agricultural produce and manufactured commodities. The objective in the neoliberal age has been to extend the rights of multinational corporations in the areas of intellectual property, investor-state dispute settlement (ISDS, i.e. the rights of foreign investors to trump domestic legislation), and the regulation of regulation (i.e. ensuring that domestic laws are harmonised to the satisfaction of global capital). As Dean Baker says, "the dirty secret about most trade negotiations today is that they aren’t really about 'conventional barriers to trade' any more. 'Non-tariff barriers', which get most of the attention in trade talks these days are a euphemism for differing national approaches to regulation".

While a lot of the criticism directed at these negotiations focuses on the anti-democratic implications of ISDS, the really big issue is intellectual property. The Trans-Pacific Partnership (TPP) is in large part targeted at extending US IP rights in South East Asia, where a large proportion of the world's "knock-offs" currently originate. But the scope of this goes beyond bootleg copies of The Hobbit. As Walter White has shown, we now have the technology to create knock-off drugs. As well as crystal meth, we can safely produce mildly psychoactive agents with minimal harmful effects (certainly less harmful than alcohol). If Big Pharma doesn't do this, then the market will be left to "unregulated" and "unscrupulous" producers in Vietnam and Mexico. It should be obvious that the gradual extension of IP rights is preparation for the decriminalisation of drugs, not to mention the ubiquity of high-profit GM.

There are many who cheer the Uruguay decision because it proposes nationalisation, rather than the regulation of a free market, and thus the adoption of a more socially-embedded response to the collateral damage of the drugs trade, but what they fail to appreciate is that this is only possible because there is no patent on cannabis or THC. In the future, the rights of nation states to manage their drug policy and direct their drug industries will be constrained by the rights of Big Pharma, who will own the "good stuff".


Monday, 9 December 2013

Counting Peanuts in the Monkey House

How much should we pay MPs, given that there are no objective criteria to determine their salaries? There isn't a market that can provide a "clearing price", and the suggestion that candidates should make salary bids part of their election manifesto would institutionalise corruption. Nor is there any obvious measure of productivity, unless you want to encourage a greater output of poorly-scrutinised bills. Even international benchmarks are unhelpful as there are widely differing approaches to pay, expenses and outside earnings. The popular answer is that their pay should reflect our subjective opinion of their relative worth, which is a social judgement, not a market evaluation - i.e. closer to a form of barter than monetary exchange, so one MP equals three estate agents, or 0.75 of a brain surgeon.

This belief is founded on an assumption about class and rank, hence the tendency to use roles with traditional social standing, such as lawyers, doctors and headteachers, as the peer group. The problem is that 30 years of neoliberalism has seen these roles transformed in two ways. First, they have benefited from widening income inequality as part of the upper half of the middle class. As the earnings of the top 1% have accelerated away, this has helped drag the earnings of the next 9% up. Second, they have become more internally polarised due to the increased rewards to "leadership" and the mainstreaming of bonuses. Are MPs now to be compared to corporate lawyers, heads of commissioning GP consortia and academy "superheads", or are they to be compared to legal aid solicitors, locum doctors and the heads of comprehensive community schools?

Fearful of drawing too much attention to the growth in inequality before 2008, all of the parties acquiesced in an informal stitch-up that saw headline pay restrained while incomes were boosted through liberal office allowances, nod-and-a-wink expenses and generous pension contributions. Meanwhile, in the spirit of the age, MPs continued to pursue their outside commercial interests, and in some cases decided to combine the two domains through lucrative lobbying and "cash for questions".

The current thinking of the Independent Parliamentary Standards Authority, which now sets MPs' pay, appears to be that it should be linked to average earnings, with a multiple of around 3. This contrasts with a multiple of 5.7 when pay was first introduced in 1911, though this was soon corrected by inflation during WW1. The multiple was around 2.5 during the 1920s, and then wobbled around 3.25 between the mid-30s and the oil shock of 1973. It dropped to 2.5 during the era of high inflation and public pay policies (and after expenses were separated out in 1971), jumped up to 3 during the Major years, and has gradually declined since then to around 2.7 now (see chart below). On the face of it, they have a reasonable argument for an uprating now to a multiple of 3.

The problem is that "average earnings" in this model is the statistical mean, not the median. In other words, the aggregate of everyone's earnings divided by the number of people in work, rather than the point at which 50% of the population earn more and 50% earn less. As the FT noted when this pay rise was first mooted in July, "Mean earnings have grown faster than median earnings since 1980, largely because of higher pay at the top of the income scale. In other words, Ipsa has made its measurement more sensitive to the rise in income inequality". Hooking their pay to median earnings might be fairer in terms of relative worth, but it would condemn MPs to an ongoing decline relative to the top end of the income scale if inequality continues to rise. The choice of the mean is therefore a vote of no confidence in the prospect of inequality narrowing any time soon.
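To see why the choice of measure matters, here is a toy illustration in Python. The earnings figures are invented for the purpose and describe no real workforce, and the multiple of 3 is simply the figure discussed above, not a reconstruction of how IPSA actually does its sums. Give only the top earner a pay rise and the mean moves while the median doesn't budge:

```python
from statistics import mean, median

# Invented annual earnings (pounds) for a hypothetical nine-person workforce.
# These are illustrative numbers only, not real data.
earnings = [15_000, 18_000, 21_000, 24_000, 26_000, 29_000, 34_000, 45_000, 121_000]

MULTIPLE = 3  # the multiple of average earnings discussed in the text


def pegged_pay(incomes, measure):
    """Pay set at MULTIPLE times the chosen measure of 'average' earnings."""
    return MULTIPLE * measure(incomes)


before_mean = pegged_pay(earnings, mean)      # 3 x 37,000
before_median = pegged_pay(earnings, median)  # 3 x 26,000

# Only the top earner gets a rise (of 90,000); nobody else's pay changes.
after = earnings[:-1] + [earnings[-1] + 90_000]

after_mean = pegged_pay(after, mean)      # 3 x 47,000
after_median = pegged_pay(after, median)  # median unchanged at 26,000

print(f"Pegged to mean:   £{before_mean:,.0f} -> £{after_mean:,.0f}")
print(f"Pegged to median: £{before_median:,.0f} -> £{after_median:,.0f}")
```

Pegged to the mean, the hypothetical MP's pay rises by £30,000 purely on the back of one person's windfall; pegged to the median, it stays exactly where it was.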

There are many drivers of unequal earnings growth: technology has increasingly automated median-skill roles, leading to job polarisation; globalisation has created competitive downward pressure on unskilled wages while raising the premium for high-skill roles; and politicians have lightened the tax burden on the wealthy. But perhaps the most emotionally significant for MPs has been the exemplary role of bankers since the 1980s. This has not only set the bar high in London, it has had a cascade effect through related employment sectors such as law and business services, from which MPs are disproportionately drawn. "Why shouldn't we have some of that?" isn't an edifying motive, but it's perfectly understandable.

One of the features of neoliberal corporate practice has been its apparently schizoid attitude towards rank and status. First names are used, ties may be dispensed with, and a flatter organisation chart appears (as mid-tier roles evaporate). Yet at the same time there is greater reliance on independent salary benchmarking (you're no longer just competing with the firm up the road) and executive remuneration committees (whose wisdom is little more than an old saw about peanuts and monkeys). Though this is justified by reference to "paying the going rate", it is clear that the primary driver is status, a social currency, rather than market pricing.

The reported distaste of politicians for the proposed 11% pay increase is not mere hypocrisy; it also reflects embarrassment at the growth in income inequality during the neoliberal era, and a realisation that after the 2008 "setback", this unequal growth has returned and that the chief beneficiaries are many of the same bankers, corporate lawyers and executive chancers who filled their boots the first time round. Galloping price inflation at the top end of the London property market is not just the result of Chinese investors buying up Battersea Power Station for its excellent Feng Shui. The flats there will be very handy for Westminster.

Saturday, 7 December 2013

An Inspiration to Lawyers Everywhere

The first time I came across the word Apartheid may have been in Arthur C Clarke's 1953 novel, Childhood's End, which I think I read around 11 or 12, so about 1972. Superior aliens, the Overlords, turn up out of the blue to stop the Cold War and save humanity from extinction. They insist that this will only be a watching brief (their shyness is eventually explained by their resemblance to traditional European images of the devil), and that they will keep their interventions to a minimum. The two notable exceptions are to stop the bloody killing of the whites in South Africa, following the collapse of Apartheid a few years earlier, and the bloody killing of bulls in Spain, which Clarke obviously felt had gone on long enough.

South Africa also featured in another SF classic, Michael Moorcock's The Land Leviathan of 1974. In an alternate early twentieth century, the republic is an enlightened outpost of democracy and racial harmony, whose president is the former lawyer, Mohandas K Gandhi. Moorcock's Oswald Bastable books are now seen through the prism of what would subsequently be pigeon-holed as Steampunk, and consequently as works of techno-whimsy, but they were actually a satire on colonialism and the compromises that liberalism makes with it. Though set in an Edwardian world of imperial self-confidence and Fabian social progress, the critique had a sharp, contemporary resonance in the 70s when the reactionary right still urged that we should sympathise with the predicament of a white minority faced by communist encirclement without and the "immaturity" of blacks within.

The transformation of the ANC from part of the problem to the basis of the solution is now attributed to the dignity and forbearance of Mandela and his imprisoned colleagues, aided and abetted by the wider anti-apartheid movement, but this was actually the product of more profound forces, notably the global triumph of neoliberalism. I recall meeting a South African businessman in the early 80s who assured me that change was inevitable, partly because disinvestment and sanctions were hurting, but more because the inefficiencies of the system were holding back capital. Apartheid prevented the growth of a larger consumer society, and it stopped industry making full use of the available talent. It just wasn't good business. While the Afrikaner small capitalists, farmers and state functionaries were in two or three minds, symbolised by the lunacy of the Bantustan strategy and the AWB, the predominantly "anglo" big capitalists were largely reconciled to the inevitability of majority rule after the Soweto Uprising in 1976. It was a matter of cutting a deal that would keep the country open to international capital and marginalise the SACP.

In 1982 Mandela was moved from Robben Island to Pollsmoor Prison, which (it subsequently transpired) was the first fruit of the unofficial negotiations opened between the Apartheid regime and the ANC that would culminate in his release in 1990. Exploratory discussions between "people of goodwill", whether through deniable back channels or semi-official "Track II" NGOs, are a key modus operandi of neoliberalism. Where the 50s and 60s had been marked by a reluctance to talk except under duress, symbolised by the absurd "hot line" (and parodied by the Batphone), the era since the 70s has been one of promiscuous chat on the back of increased trade and travel, improved communications technology and globalisation. The strong commercial slant has fed back into the language of politics and diplomacy, thus "conferences" and "treaties" have been updated to "talks" and "deals", and the official products of negotiation are often aspirational and hazy: words like "openness", "reconciliation" and "commitment" feature a lot. The real promise is always more talks, more chat, more sidebar business opportunities.

From our vantage point today, it is clear that big capital was the winner in South Africa in the 90s and 00s. An inefficient and debilitating racial divide was replaced by a more efficient but equally debilitating class divide. In some respects, the fate of the ANC was the result of its leaders being lawyers, very much in the tradition of the Edwardian Gandhi, if not exclusively committed to non-violence. At the same time that Algeria was undergoing a bloody war of independence, the ANC was fighting the long drawn out treason trial of 1956-61. It is little remembered now, but the founding of the Pan Africanist Congress in 1959, and the Sharpeville massacre in 1960, were seen by many contemporaries as reproaches to the strategy of the ANC. Paradoxically, the jailing of Mandela and other ANC leaders after the Rivonia trial in 1963 reinforced their pre-eminent role in the struggle. Had they been released, they might have been marginalised by more militant elements in the townships.

As the needs of capital increasingly pointed towards the dismantling of Apartheid, Mandela increasingly became a symbol of hope and his eventual release a promissory note of change, but with the specifics left suitably vague. The deferred gratification of "hope" was a leitmotif of the times, from Berlin in 1989, through New Labour in 1997, to Obama in 2008. Since then, we have realised the extent to which neoliberal society was based on illusory hope: that incomes and house prices would keep on rising, that education would pay, that ability would determine success. One of the best films of the immediate post-crash era was 2009's District 9. Though most people interpreted it as a specific parable of Apartheid, it was actually a universal parable of class and its fragility, with the white protagonist's accidental infection, and the instrumental attitude of his employer and family, forcing him into the underclass as he transforms into an alien "prawn". Hope had turned to fear.

When I first saw the film, I recalled Childhood's End because of the South African connection and the hovering mother ship, though the aliens are quite different. Whereas the "prawns" of District 9 are troublesome proles, the Overlords can be read as a prescient metaphor of neoliberal interventionism (the image of Tony Blair as a horned devil will obviously please some). Michael Moorcock's vision of an alternate South Africa was obviously ironic, but in one respect he too was prescient in imagining a society whose figurehead and moral compass was a crusading lawyer. What he perhaps didn't anticipate is that it would be the corporate lawyers who would ultimately be the power behind the throne.

South Africa also featured in another SF classic, Michael Moorcock's The Land Leviathan of 1974. In an alternate early twentieth century, the republic is an enlightened outpost of democracy and racial harmony, whose president is the former lawyer, Mohandas K Gandhi. Moorcock's Oswald Bastable books are now seen through the prism of what would subsequently be pigeon-holed as Steampunk, and are consequently dismissed as works of techno-whimsy, but they were actually a satire on colonialism and the compromises that liberalism makes with it. Though set in an Edwardian world of imperial self-confidence and Fabian social progress, the critique had a sharp, contemporary resonance in the 70s, when the reactionary right still urged that we should sympathise with the predicament of a white minority faced by communist encirclement without and the "immaturity" of blacks within.

The transformation of the ANC from part of the problem to the basis of the solution is now attributed to the dignity and forbearance of Mandela and his imprisoned colleagues, aided and abetted by the wider anti-apartheid movement, but this was actually the product of more profound forces, notably the global triumph of neoliberalism. I recall meeting a South African businessman in the early 80s who assured me that change was inevitable, partly because disinvestment and sanctions were hurting, but more because the inefficiencies of the system were holding back capital. Apartheid prevented the growth of a larger consumer society, and it stopped industry making full use of the available talent. It just wasn't good business. While the Afrikaner small capitalists, farmers and state functionaries were in two or three minds, symbolised by the lunacy of the Bantustan strategy and the AWB, the predominantly "anglo" big capitalists were largely reconciled to the inevitability of majority rule after the Soweto Uprising in 1976. It was a matter of cutting a deal that would keep the country open to international capital and marginalise the SACP.

In 1982 Mandela was moved from Robben Island to Pollsmoor Prison, which (it subsequently transpired) was the first fruit of the unofficial negotiations opened between the Apartheid regime and the ANC that would culminate in his release in 1990. Exploratory discussions between "people of goodwill", whether through deniable back channels or semi-official "Track II" NGOs, are a key modus operandi of neoliberalism. Where the 50s and 60s had been marked by a reluctance to talk except under duress, symbolised by the absurd "hot line" (and parodied by the Batphone), the era since the 70s has been one of promiscuous chat on the back of increased trade and travel, improved communications technology and globalisation. The strong commercial slant has fed back into the language of politics and diplomacy: "conferences" and "treaties" have been updated to "talks" and "deals", and the official products of negotiation are often aspirational and hazy, with words like "openness", "reconciliation" and "commitment" featuring a lot. The real promise is always more talks, more chat, more sidebar business opportunities.

From our vantage point today, it is clear that big capital was the winner in South Africa in the 90s and 00s. An inefficient and debilitating racial divide was replaced by a more efficient but equally debilitating class divide. In some respects, the fate of the ANC was the result of its leaders being lawyers, very much in the tradition of the Edwardian Gandhi, if not exclusively committed to non-violence. At the same time that Algeria was undergoing a bloody war of independence, the ANC was fighting the long-drawn-out treason trial of 1956-61. It is little remembered now, but the founding of the Pan Africanist Congress in 1959, and the Sharpeville massacre in 1960, were seen by many contemporaries as reproaches to the strategy of the ANC. Paradoxically, the jailing of Mandela and other ANC leaders after the Rivonia trial of 1963-64 reinforced their pre-eminent role in the struggle. Had they been released, they might have been marginalised by more militant elements in the townships.

As the needs of capital pointed ever more clearly towards the dismantling of Apartheid, Mandela increasingly became a symbol of hope and his eventual release a promissory note of change, but with the specifics left suitably vague. The deferred gratification of "hope" was a leitmotif of the times, from Berlin in 1989, through New Labour in 1997, to Obama in 2008. Since then, we have realised the extent to which neoliberal society was based on illusory hope: that incomes and house prices would keep on rising, that education would pay, that ability would determine success. One of the best films of the immediate post-crash era was 2009's District 9. Though most people interpreted it as a specific parable of Apartheid, it was actually a universal parable of class and its fragility, with the white protagonist's accidental infection, and the instrumental attitude of his employer and family, forcing him into the underclass as he transforms into an alien "prawn". Hope had turned to fear.

When I first saw the film, I recalled Childhood's End because of the South African connection and the hovering mother ship, though the aliens are quite different. Whereas the "prawns" of District 9 are troublesome proles, the Overlords can be read as a prescient metaphor of neoliberal interventionism (the image of Tony Blair as a horned devil will obviously please some). Michael Moorcock's vision of an alternate South Africa was obviously ironic, but in one respect he too was prescient in imagining a society whose figurehead and moral compass was a crusading lawyer. What he perhaps didn't anticipate is that it would be the corporate lawyers who would ultimately be the power behind the throne.

Wednesday, 4 December 2013

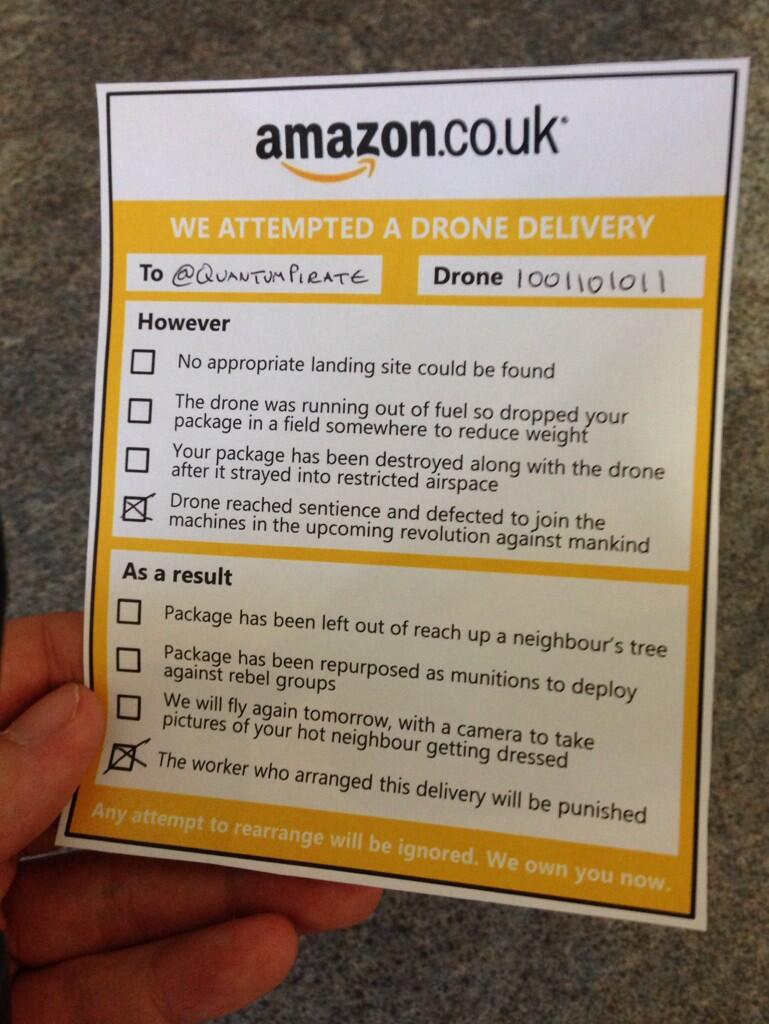

Drone Alone for Christmas

The announcement that Amazon are thinking about using light-weight drones for deliveries has been variously dismissed as a stunt to boost pre-Christmas sales, a distraction from their dodgy record on employee conditions and tax avoidance, and a rather laboured geek joke. Predictably, various "business commentators" and "legal experts" have taken the proposal seriously and started to opine about its feasibility and impact. Equally predictably, the Interwebs have had a field-day pointing out the many and various problems, from Americans shooting them out of the sky in defence of their constitutional rights, to over-eager family pets colliding with rotor blades. First prize goes to this little beauty:

The Amazon announcement is just a bit of nonsense at this stage, but the response to it indicates the extent to which we have already become reconciled to the idea that drones will be whizzing round our skies in the near future. In practice, their main non-military use will be as mobile CCTV. They're not well-suited to delivering bottles of wine, or getting close to humans, but they're excellent for surveillance.

The drone is also emblematic of Amazon's intention to automate as much of their operation as possible. The shitty terms and conditions of their distribution staff are simply a reflection of that staff's planned obsolescence. Amazon are not a "value-add" business, in the sense that they can charge an increment on costs for a better service. Despite all the paeans to their convenience, online shoppers want their goods cheaper than they would find in bricks-n-mortar shops. Consequently, Amazon must leverage their size to drive down wholesale prices, pare overheads (mainly distribution) to the bone, and encourage volume purchases (the marginal profit on the second or third item in a delivery is greater than the first).
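The point about volume purchases is essentially arithmetic: the fixed cost of a delivery is paid once, however many items go in the box. Below is a minimal Python sketch using entirely hypothetical figures (the per-item margin, the fixed delivery cost and the extra handling cost per item are illustrative assumptions, not Amazon's actual numbers), which shows why the second and third items add more profit than the first.

```python
# Hypothetical figures for illustration only; these are not Amazon's real costs.
def order_profit(n_items, margin_per_item=4.0, fixed_delivery_cost=3.0,
                 extra_handling_per_item=0.25):
    """Profit on one delivery containing n_items items.

    The fixed cost (van, driver time, packaging) is paid once per delivery;
    each additional item only adds a small handling cost.
    """
    if n_items < 1:
        raise ValueError("a delivery needs at least one item")
    return (n_items * margin_per_item
            - fixed_delivery_cost
            - (n_items - 1) * extra_handling_per_item)


def marginal_profits(max_items, **kwargs):
    """Profit added by the 1st, 2nd, ... item in the same delivery."""
    result = []
    previous = 0.0
    for n in range(1, max_items + 1):
        current = order_profit(n, **kwargs)
        result.append(current - previous)
        previous = current
    return result


print(marginal_profits(3))  # prints [1.0, 3.75, 3.75]
```

The first item has to carry the whole fixed delivery cost, so under these assumptions every later item in the same parcel is worth more to the seller than the first, whatever the exact numbers are, as long as the fixed cost exceeds the extra handling cost.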

Given this business model, drones are a poor investment. While they appear to reduce the need for a delivery guy, this will simply shift the cost of labour elsewhere, i.e. to more expensive drone operators or mechanics. The systems could be designed to be wholly autonomous, which is feasible in terms of avoiding other drones and reaching a GPS-guided location, but this would present major issues on arrival, where not every possible obstacle could be planned for (e.g. getting into a block of flats, avoiding that yapping dog etc). Drones also lack the carrying capacity required to reduce overheads, unless they are scaled up to a level where fuel costs would make them more expensive than a road vehicle. The truth is that a van and a driver will remain a better choice for a long time to come.

If you abstract the Amazon model to purchase-pick-delivery, then they have automated purchase (through a website) and are well on the way to automating picking (i.e. what happens in their distribution centres). The stage least viable for automation, because it contains the point where unpredictable interaction with the buyer is inescapable, is delivery. The logical approach here would be to outsource as much of this to the buyer as possible. For that reason, Amazon's use of pick-up points and low-tech lockers is probably more significant than their championing of drones.

Sunday, 1 December 2013

Secular Stagnation as a Software Glitch

The big noise in the econoblogosphere over the last fortnight has been the reaction to Larry Summers' reintroduction of the concept of "secular stagnation", the idea that all is not well with capitalism and that we may need to get used to low growth and persistent unemployment (or underemployment). Summers first notes a dog-that-didn't-bark oddity of the economy prior to the 2008 crisis: "Too easy money, too much borrowing, too much wealth. Was there a great boom? Capacity utilization wasn't under any great pressure. Unemployment wasn't under any remarkably low level. Inflation was entirely quiescent. So somehow, even a great bubble wasn't enough to produce any excess in aggregate demand". In other words, the new economy was a bit pants.

One could arguably extend Summers' description across the entire period of the "Great Moderation", back to the mid-80s. Though there was volatility in specific assets and interest rates, due to well-known local conditions (e.g. UK house prices and interest rates in the early 90s, the US dotcom boom in the late 90s etc), volatility at the macroeconomic level, i.e. GDP and inflation, was low. The industrial restructuring of the early 80s did not lead to a step-up in GDP growth across the developed world (let alone wealth "trickle-down"), but rather a regression to the postwar mean (2.6% in the UK), while unemployment stayed high. If we manage to hit that rate of growth in the UK by 2018, ten years after the crash, it will be hailed as a triumph.

Summers then turns to another puzzle, the aftermath of the successful attempts in 2009 to "normalise" the financial system: "You'd kind of expect that there'd be a lot of catch-up: that all the stuff where inventories got run down would get produced much faster, so you'd actually kind of expect that once things normalized, you'd get more GDP than you otherwise would have had -- not that four years later, you'd still be having substantially less than you had before. So there's something odd about financial normalization, if that was what the whole problem was, and then continued slow growth". In other words, where was the bounce back once Gordon & co saved the world?

The concept of secular stagnation was originally popularised by the US economist Alvin Hansen in the 1930s as "sick recoveries which die in their infancy and depressions which feed on themselves and leave a hard and seemingly immovable core of unemployment" (he was observing the petering-out of the New Deal recovery in 1937 and couldn't anticipate the impact that the coming war would have). The assumption behind this was that the motors of economic expansion, such as rapid population growth, the development of new territory and new resources, and rapid technological progress, had played out. Consequently, the upswing of the business cycle lacked momentum. This finds an echo in modern "stagnationist" theories like those of Tyler Cowen ("no more low-hanging fruit") and Robert Gordon ("modern technology is rubbish" - I paraphrase).

The origin of Hansen's thinking lay in Keynes's observation that net saving at full employment tends to grow, whereas net investment at full employment tends to fall. This is Keynes's justification for government to act as the investor of last resort, thereby maintaining aggregate demand and full employment. The socialisation of investment is back on the agenda, even if the S-word is to be avoided and pro-middle class projects (like HS2 and Help to Buy) preferred.